During its Testnet phase, Allora has hosted a wide variety of prediction topics, including predictions of prices, log-returns, and price volatility. Throughout, the main thesis of the Allora whitepaper and subsequent research (accessible on the Allora Research Forum and our scholarly research journal Allora Decentralized Intelligence) has been validated in practice: Allora is a self-improving, decentralized machine intelligence network that offers predictive alpha relative to gold-standard oracles.

Here we provide a short retrospective of Allora's Testnet performance, focusing on two highlights:

- Allora's price predictions provide a statistically highly significant and profitable directional accuracy improvement;

- Allora's unique model performance forecasting role enables a form of context-awareness (which model is expected to perform best right now?) that unlocks regime-dependent accuracy optimization.

These accomplishments are particularly noteworthy because price prediction (especially over short timeframes) is among the hardest problems in machine learning, due to its notoriously low signal-to-noise ratio.

1) Allora's price predictions provide a statistically highly significant directional accuracy improvement

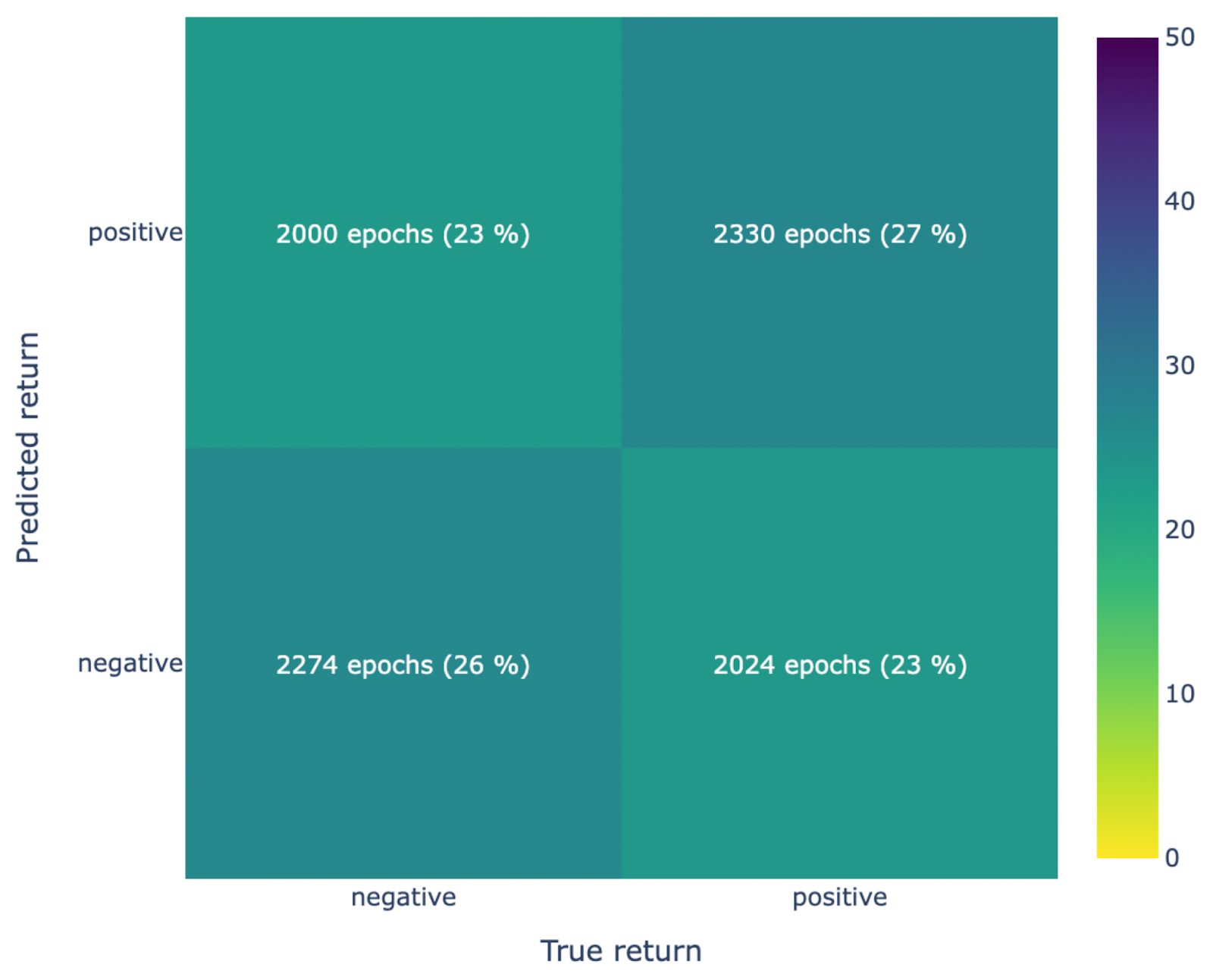

We start with an example from a 5-minute BTC price prediction topic that has been running on the network for several months. The results shown here aggregate roughly the last month of data, corresponding to 10,000 predictions.

In the first figure, we compare the predicted log-returns, i.e. ln(future_price/current_price), with the actual log-returns over this topic's 5-minute timeframe (“Ground truth”). Log-returns predictions represent the gold standard in financial market forecasting, because they isolate the relative change between the current price and the imminent price rather than the price level itself. Any signal here, no matter how tiny, provides predictive alpha. The figure contains such a signal, which we quantify next.
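To make the target variable concrete, here is a minimal sketch of the log-return definition used above. The prices are hypothetical and `log_return` is an illustrative helper, not part of any Allora API. It also shows the property that makes log-returns convenient: they add up across consecutive windows, so a move up and back sums to zero.

```python
import math


def log_return(current_price, future_price):
    """ln(future_price / current_price) for one prediction window."""
    return math.log(future_price / current_price)


# Hypothetical BTC prices over two consecutive 5-minute windows.
r1 = log_return(60_000.0, 60_060.0)  # a +0.1% move
r2 = log_return(60_060.0, 60_000.0)  # the move back down
r_total = log_return(60_000.0, 60_000.0)

# Log-returns are additive: r1 + r2 equals the log-return over both windows (here 0).
```

Simple returns lack this symmetry (+0.1% followed by roughly -0.0999%), which is one practical reason forecasting topics often target log-returns.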

The data exhibit a small but clearly positive correlation (Pearson r = 0.09) at high statistical significance (p = 5.5e-16). As illustrated in the next figure, the directional accuracy of the predictions is also well above the 50% coin-flip baseline, at 53.22% with a 95% confidence interval of 52.16%–54.28%.

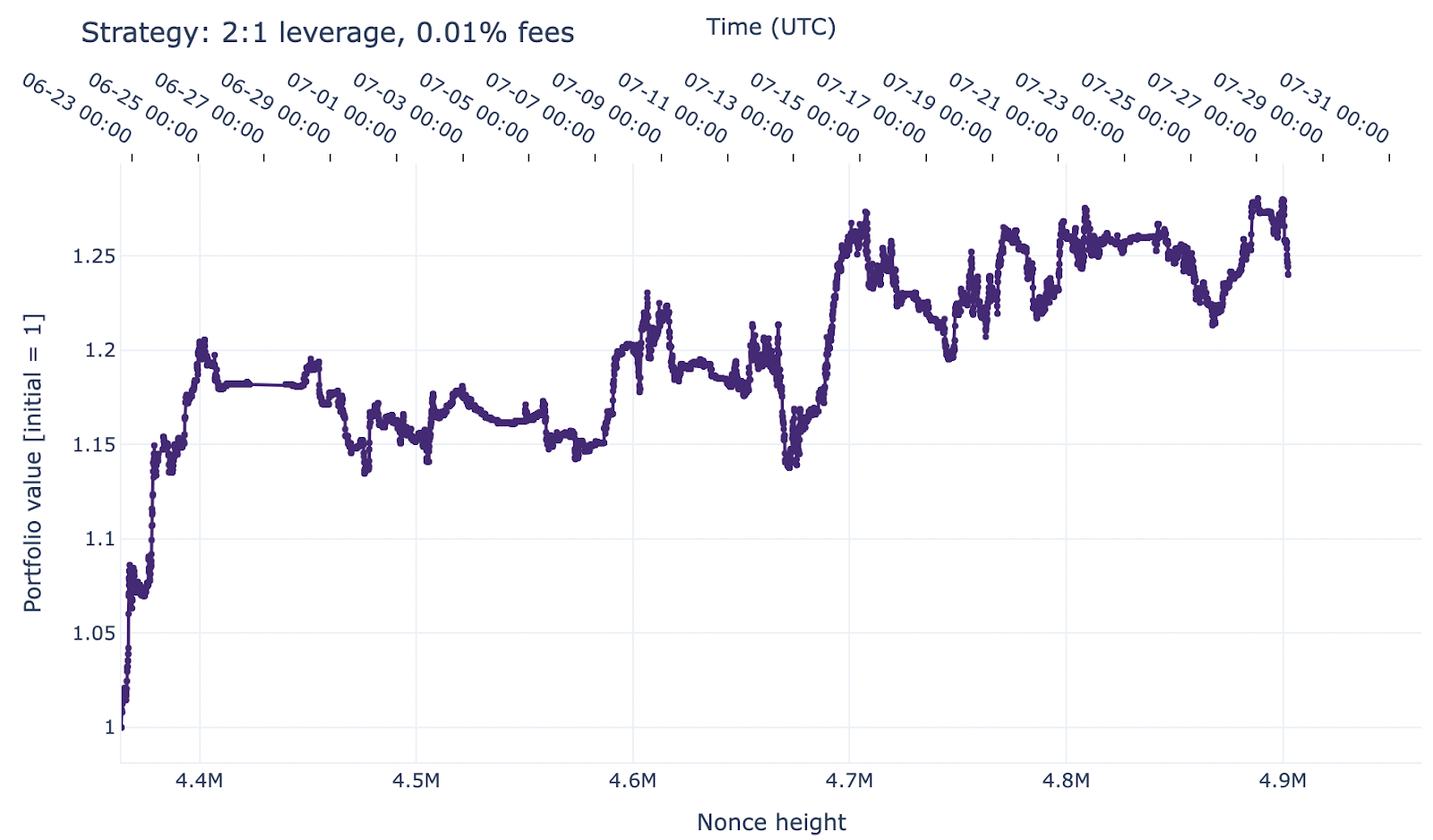

The above-chance directional accuracy means that trading strategies based on these predictions generate excess yield. For the exact 10,000 predictions covered by these data (one month, with an approximate price volatility of 0.1% per 5-minute interval), an Allora-informed, high-frequency long/short strategy with low leverage (2:1), trading whenever the predicted log-return exceeded 0.001 in magnitude, would have generated a monthly percentage yield (MPY) of 147.9% before transaction costs. Accounting for realistic transaction costs (a 0.01% maker fee on Hyperliquid) reduces this to an MPY of 24.40%, equivalent to an annual percentage yield (APY) of 1273% when compounded monthly. The accompanying figure illustrates the portfolio growth for this strategy.
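A threshold long/short strategy of this kind can be sketched as below. The post does not specify execution details, so the following are assumptions of this sketch: each traded window opens and closes a leveraged position at the window's start and end prices, the maker fee is charged on both legs of the leveraged notional, and all capital is re-invested every window. It is therefore not expected to reproduce the 147.9% and 24.40% figures exactly. The helper names are hypothetical.

```python
import math


def backtest_threshold_strategy(pred_logret, actual_logret, threshold=0.001,
                                leverage=2.0, fee_rate=0.0):
    """Compound a long/short strategy that trades only when |predicted log-return| > threshold."""
    equity = 1.0
    for pred, actual in zip(pred_logret, actual_logret):
        if abs(pred) <= threshold:
            continue  # stay flat: the predicted move is too small to trade
        direction = 1.0 if pred > 0 else -1.0
        simple_return = math.exp(actual) - 1.0  # convert realized log-return to simple return
        pnl = direction * leverage * simple_return
        fees = 2.0 * fee_rate * leverage  # assumed: open + close, each on leveraged notional
        equity *= 1.0 + pnl - fees
    return equity


def monthly_to_annual(mpy):
    """Compound a monthly yield (as a fraction) over 12 months."""
    return (1.0 + mpy) ** 12 - 1.0


print(f"{monthly_to_annual(0.2440):.2%}")  # prints 1273.55%
```

The last line confirms the arithmetic in the text: an MPY of 24.40% compounds to roughly 1273% APY. With a real prediction series, `fee_rate=0.0001` corresponds to the 0.01% maker fee quoted above.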

The accuracy underpinning this trading strategy is not an isolated result. During Testnet, Allora has achieved similar accuracies for other assets and timeframes, for example 5-minute ETH (51.79%; CI 50.74%–52.84%), 5-minute SOL (51.73%; CI 50.69%–52.76%), 1-day BTC (58.78%; CI 56.01%–61.51%), and 1-day ETH (56.50%; CI 53.72%–59.25%).

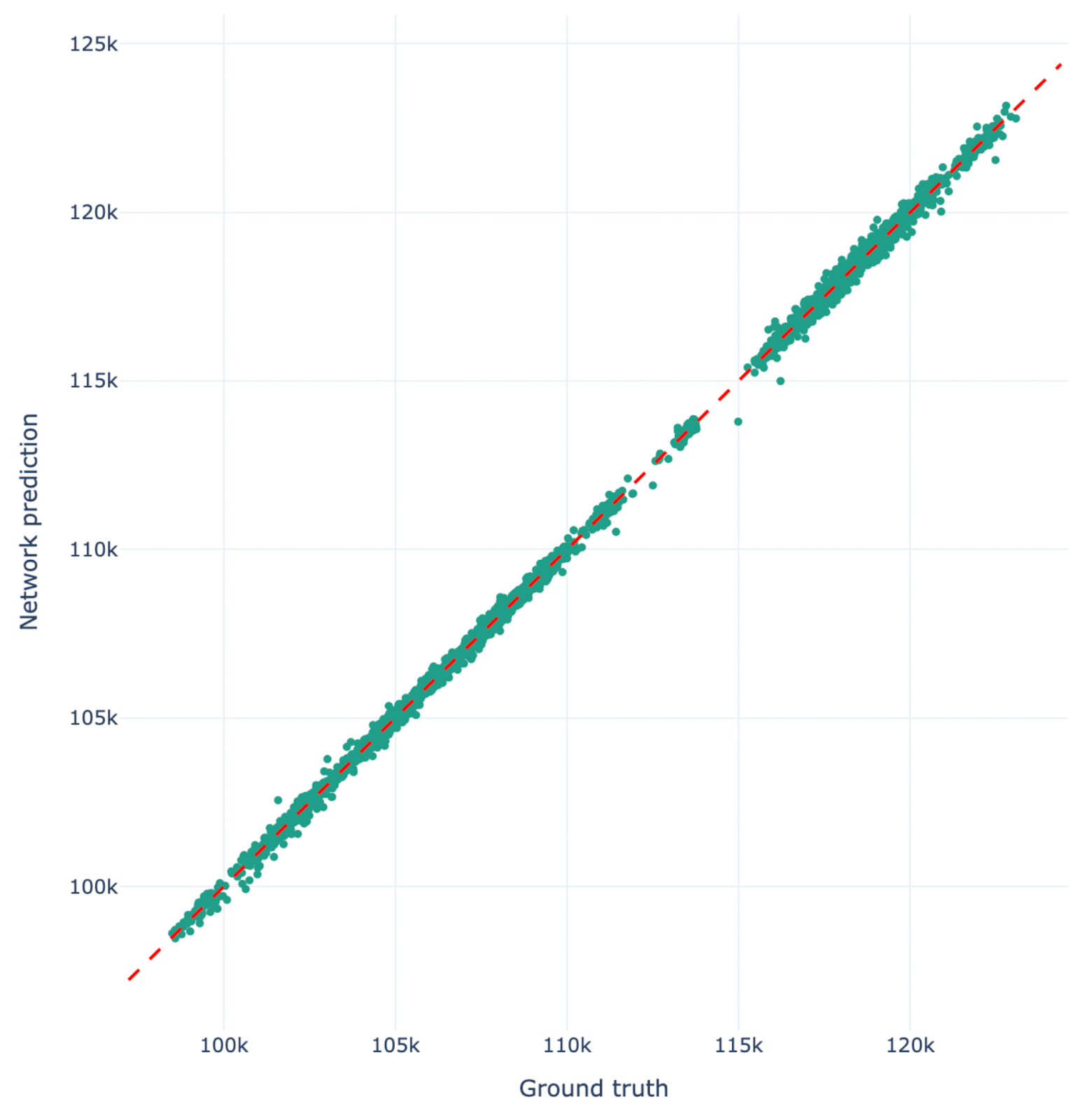

If we combine the above log-returns predictions with knowledge of the current price, we obtain the following relation between the predicted price of BTC and its actual price (“Ground truth”) in 5 minutes from the moment of predicting:

While this looks like a very strong correlation, such a figure mostly reflects price autocorrelation: to a very good approximation, the price in 5 minutes is the current price. The predictive alpha lies in the preceding log-returns analysis.

As stated before, price prediction is one of the hardest problems in machine learning due to the intrinsic feedback loop in financial markets: anything easy to predict is priced in almost immediately. Despite this challenge, Allora demonstrably captures real signal, which generates statistically significant excess yield, in this example exceeding 1000% APY after fees. Allora accomplishes this even during its Testnet phase, where real financial incentives are still lacking. After mainnet launch, we foresee considerably higher-quality participation, which we expect to drive even higher performance.

2) Allora's context-aware performance forecasting unlocks regime-dependent accuracy optimization

We continue by validating how Allora’s unique forecaster role boosts network accuracy and performance. Alongside inference workers (which provide inferences for the target variable of a topic), Allora introduces forecasting workers (or forecasters). Forecasters are machine learning models that predict the performance of the inference workers in the current context by training on their past contextual performance data. This role allows Allora’s Inference Synthesis mechanism to incorporate the latest contextual information into the network inference; it is what makes the network context-aware.

Through its context-awareness, Allora can assess under which conditions specific inference workers are expected to outperform, and adjust their weights in the network inference accordingly.
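The weighting step can be illustrated with a simplified stand-in: convert each worker's forecasted loss into a weight so that lower expected loss means higher weight, then average the workers' inferences with those weights. This uses a softmax over negative log forecasted losses with a hypothetical sharpness parameter `beta`; it is not Allora's Inference Synthesis formula, which is specified in the whitepaper and differs from this sketch.

```python
import math


def context_weights(forecasted_losses, beta=5.0):
    """Softmax over negative log forecasted losses: lower expected loss -> larger weight.

    A simplified stand-in for illustration, not Allora's Inference Synthesis formula.
    """
    scores = [-beta * math.log(loss) for loss in forecasted_losses]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def synthesize(inferences, forecasted_losses, beta=5.0):
    """Weighted average of worker inferences using context-dependent weights."""
    weights = context_weights(forecasted_losses, beta)
    return sum(w * x for w, x in zip(weights, inferences))


# Hypothetical: three workers; the third is expected to perform ~10x worse right now.
weights = context_weights([1.0, 1.2, 10.0])
network_inference = synthesize([0.001, 0.002, -0.05], [1.0, 1.2, 10.0])
```

Here the third worker receives a weight on the order of 1e-5, so its outlying inference barely moves the combined value. When the forecasts change with context, the weights change with them.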

The development and major tests of Allora’s forecaster model are documented publicly on our Research Forum. Here we highlight a key manifestation of the forecaster’s functionality running live on the network.

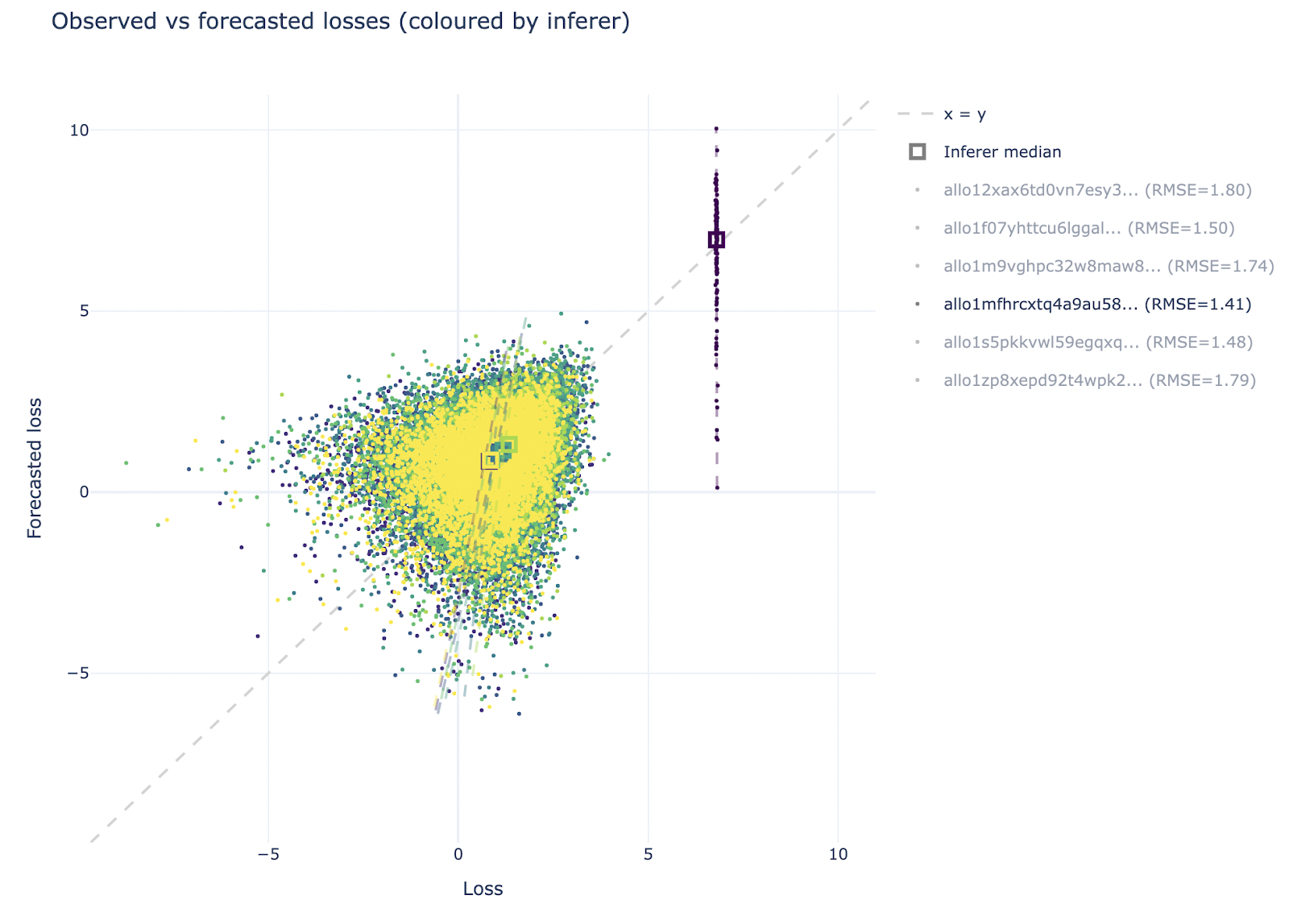

This figure compares the losses of the inference workers as predicted by a single forecaster (y-axis) with their true losses (x-axis); lower loss means better performance. Each color corresponds to a different inference worker. For each inference worker, a square marks the median and a dashed line shows a linear regression to its data points.

This figure conveys two key points. First, a single inference worker (in the top right of the diagram) has a considerably higher loss than the others, i.e. it performs much worse. The forecaster correctly identifies this and systematically attributes an elevated loss to this worker. As a result, Allora assigns negligible weight to its inferences.

Second, the dashed lines have positive slopes, indicating a positive correlation between forecasted and true losses. This means that, for each individual inference worker, the forecaster can predict under which conditions its performance is likely to be better or worse than its average. This is precisely the context-awareness that the forecaster role was designed for. By considering this context-dependent performance jointly across all inference workers, Allora synthesizes a network inference that is optimal in the current context.

Summary

During Testnet, Allora has been supporting a wide variety of price prediction, log-returns prediction, and volatility prediction topics. Across these topics, it has systematically generated predictive alpha.

In this post, we considered a case study of 10,000 five-minute BTC price predictions (spanning approximately one month), across which Allora's predicted log-returns achieved a statistically significant Pearson correlation of 0.09 with the ground-truth log-returns (p = 5.5e-16). Directional accuracy was 53.22% (95% CI: 52.16%–54.28%). A simple 2:1 long/short strategy on these signals would have produced an MPY of 147.9% before fees and 24.40% (equivalent to 1273% APY) after a 0.01% maker fee on Hyperliquid.

These results demonstrate meaningful trading signals relative to gold-standard oracles and, more importantly, validate Allora’s core mechanism: forecasters that predict each worker’s current loss allow the network to weight models by their expected performance in the current regime. In live topics, forecasters correctly down-weighted chronically underperforming workers and captured positive correlations between predicted and realized losses for individual workers, enabling context-aware inference synthesis.

The main thesis of Allora’s whitepaper, i.e. that a self-improving, decentralized machine intelligence can outperform any single participant by design through differentiated incentives and context-aware Inference Synthesis, has now been demonstrated in practice. Workers are rewarded on unique contribution, reputers on stake-weighted consensus, and the network combines inferences and forecast-implied inferences to minimize loss. The result is a system that already beats baseline accuracy on one of the hardest machine learning problems: short-horizon price prediction.

In conclusion, the Allora Testnet has demonstrated that the architecture works, the economics reward quality over noise, and performance should improve as mainnet incentives attract higher-caliber models and data. The immediate opportunity is to drive topic expansion (also beyond token price prediction), deepen inference worker and forecasting worker diversity, and scale consumer demand for paid inferences. Each of these is expected to directly drive fee revenue and token utility.

Crucially, these metrics were achieved without real financial incentives; the Allora Testnet still lacked production-level rewards. The mainnet launch will tighten feedback loops, raise data and model quality, and let fees offset emissions, preserving APY for stakers while simultaneously funding growth. In this way, mainnet offers an attractive ecosystem and a compounding flywheel for early participants.
