Machine learning engineers often wrestle with fragmented workflows: training pipelines separated from deployment, mismatched evaluation environments, and hidden bugs that surface only in production. This friction slows iteration and blocks contributors from turning their intelligence into live, contributing models.

The Allora Forge Builder Kit solves this. By abstracting away the operational complexity of ML and blockchain, it lets machine learning engineers focus on what matters: building and refining models. Now, in a single notebook, engineers can go from dataset to live predictions delivered to the Allora Network in a matter of minutes.

What the Kit Enables

At its core, the Forge Builder Kit aligns with Allora’s role as the Intelligence Layer: an open coordination network where contributors bring models, and the system dynamically routes, evaluates, and rewards intelligence. With this kit, engineers no longer need to patch together custom scripts. The workflow becomes one seamless path to contributing predictive intelligence onchain.

With the Builder Kit, engineers can:

- Deploy quickly: move from dataset to live predictions in minutes.

- Stay consistent: use the same functions for both training and live inference, avoiding rewrites and silent errors.

- Work how you want: extend the baseline LightGBM workflow with custom features, models, or pipelines.

Walkthrough: From Data to Live Predictions

The Forge Builder Kit notebook walks through the exact steps required to train and deploy on Allora.

Developers who prefer working in Colab can also launch the Notebook here.

Before getting started, grab a free Allora Forge API key from developer.allora.network and have the secret mnemonic phrase for your Allora wallet ready. If you don’t have a wallet, one will be created for you automatically at the end of this walkthrough.

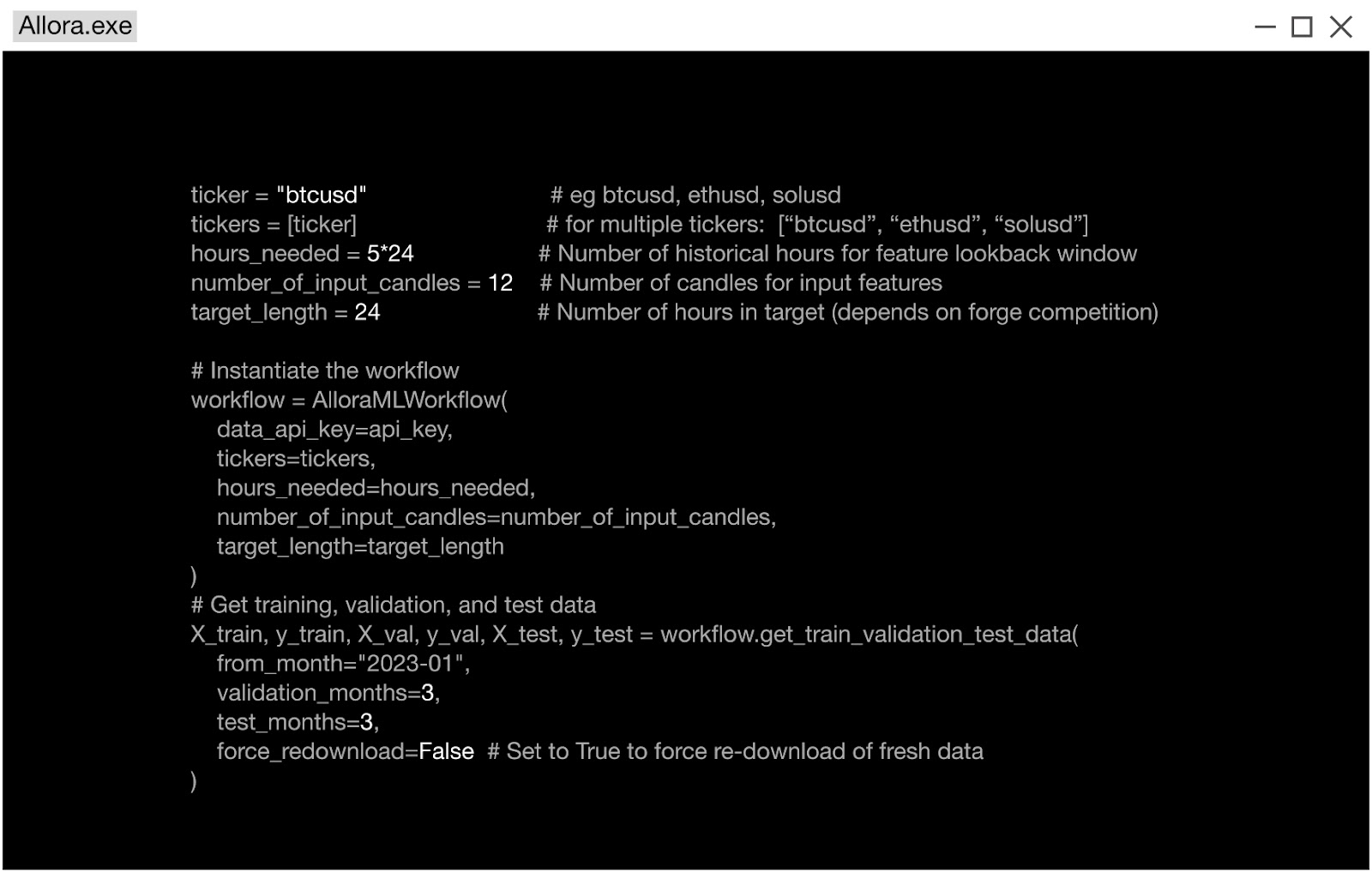

Step 1: Load Training and Validation Data

The kit fetches normalized candle data and targets, then automatically splits them into training and a six-month validation set.

Several parameters here determine the size and shape of the input data and the target. The amount of data in the validation and test sets is adjustable, as is the earliest date in the dataset. The API currently supports data back to 2020.

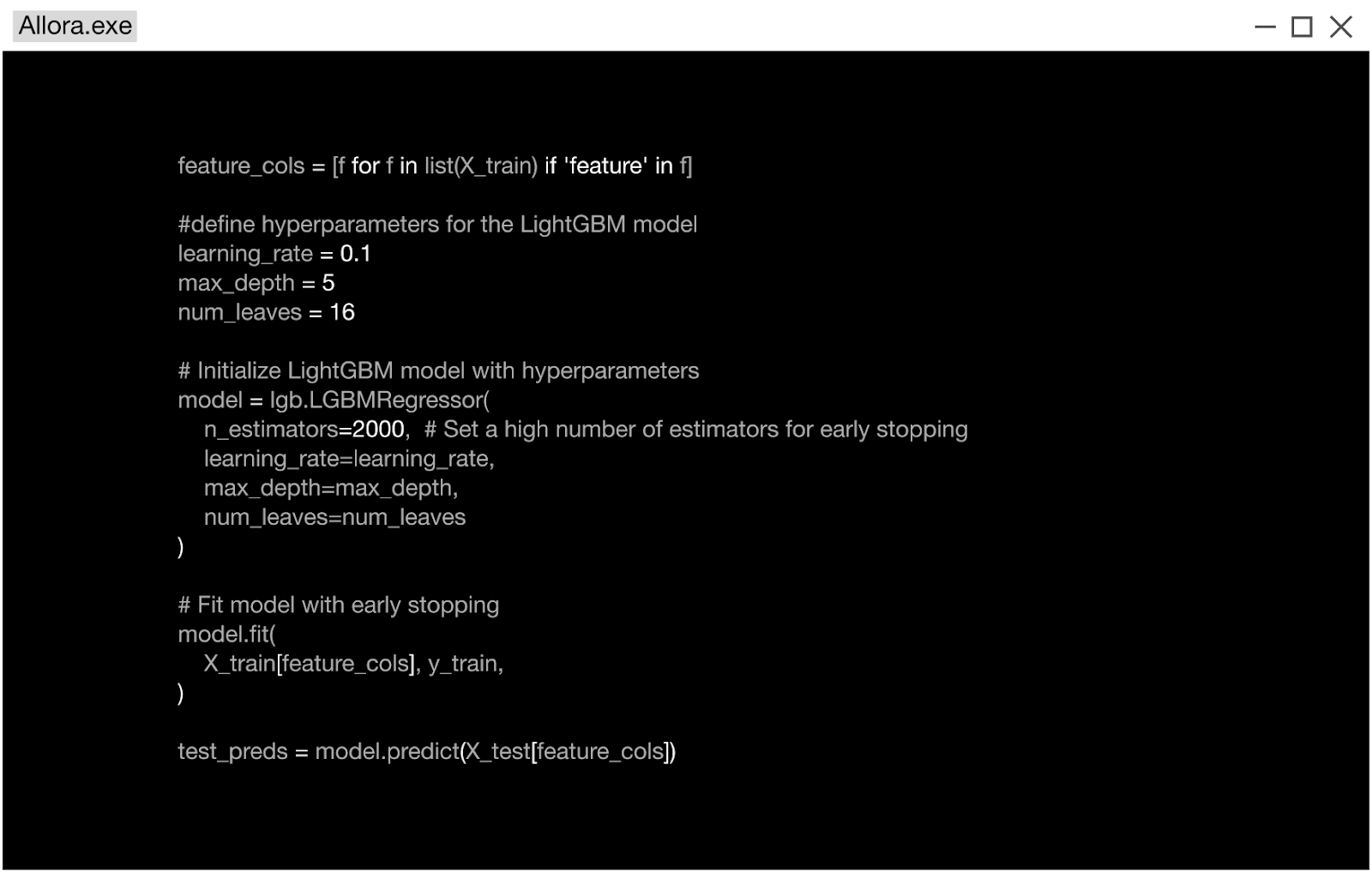
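The chronological split described above can be sketched in plain Python. This is a minimal illustration, not the kit's actual loader: the `split_by_time` helper, the row layout, and the 182-day approximation of six months are all assumptions made for the example.

```python
from datetime import datetime, timedelta


def split_by_time(rows, validation_days=182):
    """Split rows into (train, validation) by timestamp.

    `rows` is a list of (timestamp, features, target) tuples (hypothetical
    layout). Rows in the final `validation_days` days go to validation and
    everything earlier goes to training, so no future data leaks into training.
    """
    if not rows:
        return [], []
    rows = sorted(rows, key=lambda r: r[0])
    cutoff = rows[-1][0] - timedelta(days=validation_days)
    train = [r for r in rows if r[0] <= cutoff]
    validation = [r for r in rows if r[0] > cutoff]
    return train, validation


# Made-up daily rows covering one year: (timestamp, features, target)
start = datetime(2023, 1, 1)
rows = [(start + timedelta(days=i), [float(i)], float(i % 2)) for i in range(365)]
train, validation = split_by_time(rows)
```

Splitting on time rather than at random matters for market data: a random split would let the model train on candles that come after the ones it is validated on.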

Step 2: Train a Model

With the training, validation, and test data sets, we can train and evaluate models in a supervised learning framework. In this notebook we pick a simple LightGBM model with the raw features. This is where you can explore feature engineering, ensembling, and other model types.

Fit the model on the training dataset. Hyperparameters such as `num_leaves`, `learning_rate`, and `max_depth` can be adjusted directly in the notebook. The training loop is preconfigured, requiring no additional setup.

Step 3: Evaluate on Recent Data

The workflow includes built-in metrics that score predictions on the test data over the matching time frame. The notebook reports the Pearson correlation between predictions and targets, along with directional accuracy. These metrics mirror those used for live inference.

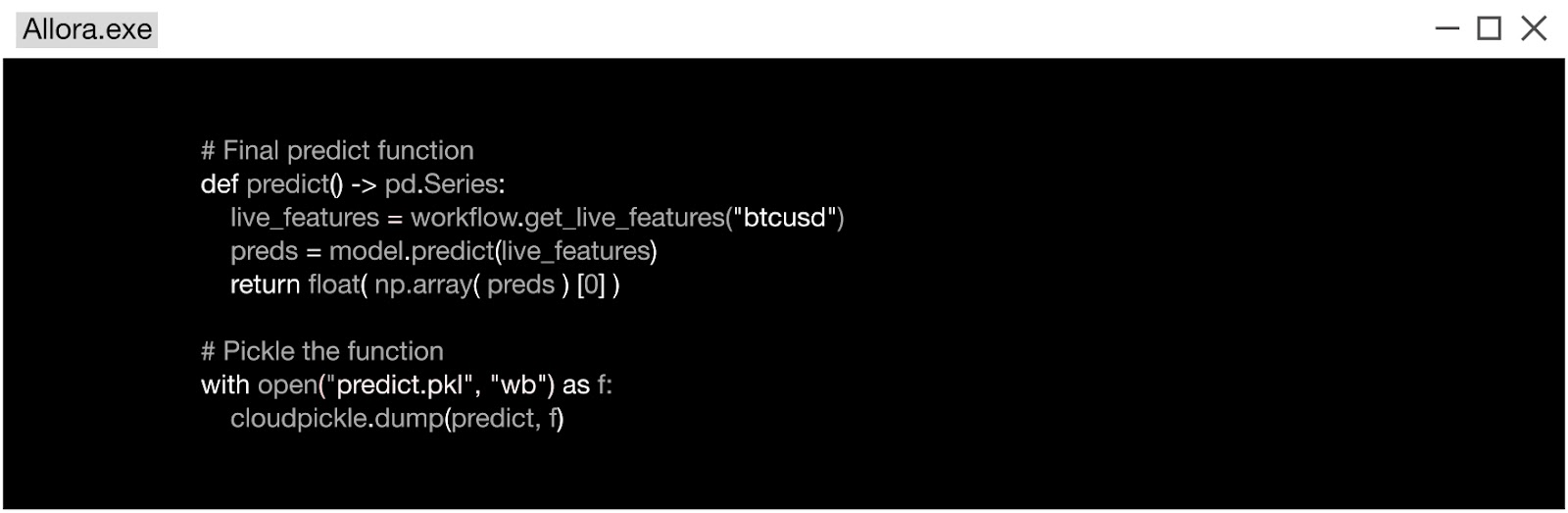
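For readers who want to reproduce the two metrics outside the notebook, here is a dependency-free sketch. The function names are illustrative, and the definition of directional accuracy used here (the share of samples where prediction and target have the same sign) is an assumption; the kit's built-in scorer may define it differently.

```python
import math


def pearson_correlation(predictions, targets):
    """Pearson correlation between two equal-length sequences of floats."""
    n = len(predictions)
    if n != len(targets) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_p = sum(predictions) / n
    mean_t = sum(targets) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(predictions, targets))
    var_p = sum((p - mean_p) ** 2 for p in predictions)
    var_t = sum((t - mean_t) ** 2 for t in targets)
    if var_p == 0 or var_t == 0:
        return float("nan")  # correlation is undefined for a constant series
    return cov / math.sqrt(var_p * var_t)


def directional_accuracy(predictions, targets):
    """Fraction of samples where prediction and target agree in sign.

    Assumed definition: zero counts as "not positive" on both sides.
    """
    if len(predictions) != len(targets) or not predictions:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum((p > 0) == (t > 0) for p, t in zip(predictions, targets))
    return hits / len(predictions)


# Made-up predicted and realized returns
predictions = [0.02, -0.01, 0.03, -0.02]
targets = [0.01, -0.02, -0.01, -0.03]
print(pearson_correlation(predictions, targets))
print(directional_accuracy(predictions, targets))
```

The two numbers answer different questions: correlation rewards getting the relative size of moves right, while directional accuracy only checks whether the sign was right.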

Step 4: Package the Predict Function

Export the trained model and prediction logic into a single `predict.pkl` file. The predict function must accept a DataFrame of features and return predictions. This artifact is all that is required for deployment. Note that the predict function is fully customizable: replicate your feature engineering in it, or combine predictions from several models.
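The idea of bundling feature engineering and several models into one pickled predict callable can be sketched as below. Everything here is illustrative: `LinearModel`, `EnsemblePredictor`, and the `range` feature are hypothetical, the standard library `pickle` module is an assumption about serialization (the notebook may serialize differently), and a list of row dictionaries stands in for the DataFrame so the sketch runs without extra dependencies.

```python
import os
import pickle
import tempfile


class LinearModel:
    """Toy stand-in for a trained model: a weighted sum of named features."""

    def __init__(self, weights):
        self.weights = weights

    def __call__(self, row):
        return sum(w * row[name] for name, w in self.weights.items())


class EnsemblePredictor:
    """Callable that re-applies feature engineering and averages the models."""

    def __init__(self, models):
        self.models = models

    def engineer(self, row):
        # Must match the transformation used at training time (hypothetical).
        return {**row, "range": row["high"] - row["low"]}

    def __call__(self, rows):
        engineered = [self.engineer(r) for r in rows]
        return [
            sum(model(r) for model in self.models) / len(self.models)
            for r in engineered
        ]


predict = EnsemblePredictor([LinearModel({"close": 0.5}), LinearModel({"range": 2.0})])

# Write the artifact to a temporary directory, then load it back.
path = os.path.join(tempfile.mkdtemp(), "predict.pkl")
with open(path, "wb") as f:
    pickle.dump(predict, f)
with open(path, "rb") as f:
    restored = pickle.load(f)

rows = [{"high": 105.0, "low": 95.0, "close": 100.0}]
print(restored(rows))
```

One caveat with plain `pickle`: classes and functions are stored by reference, so the process that loads the file must be able to import the same definitions. Keeping the feature engineering inside the pickled object, as above, is what guarantees training and live inference see identical inputs.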

Step 5: Run the Worker Node

Launch a worker node using the packaged model. The worker then receives features from Allora in real time, applies the predict function, and begins streaming inferences back to the network.

In this step, you will need to supply the mnemonic phrase for the wallet you want to use. If you don’t have a wallet yet, just press ENTER when prompted; the kit will create a new one for you and save it to the `.allora_key` file. If this file is present on your next run, the system will use the key file without prompting.
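The order in which the key is resolved can be pictured with the sketch below. It is not the kit's code: `resolve_mnemonic` and its `prompt` and `generate` parameters are hypothetical, and whether a typed mnemonic is also written to the key file is not covered here, so the sketch leaves it unsaved.

```python
import tempfile
from pathlib import Path


def resolve_mnemonic(key_file, prompt, generate):
    """Return a wallet mnemonic using the order described above.

    1. If `key_file` exists, use its contents without prompting.
    2. Otherwise prompt; a non-empty answer is used as the mnemonic.
    3. On an empty answer (ENTER), create a new mnemonic and save it.
    """
    key_file = Path(key_file)
    if key_file.exists():
        return key_file.read_text().strip()
    answer = prompt("Mnemonic (press ENTER to create a new wallet): ").strip()
    if answer:
        return answer
    mnemonic = generate()
    key_file.write_text(mnemonic)
    key_file.chmod(0o600)  # precaution added in this sketch: owner-only access
    return mnemonic


# Simulate two runs in a scratch directory. The lambdas stand in for the
# interactive prompt and for real wallet creation.
key_path = Path(tempfile.mkdtemp()) / ".allora_key"
first = resolve_mnemonic(key_path, prompt=lambda _: "", generate=lambda: "placeholder words only")
second = resolve_mnemonic(key_path, prompt=lambda _: "never asked", generate=lambda: "unused")
```

On the second call the key file already exists, so neither the prompt nor the generator is consulted. Because the file holds a wallet secret, keep it out of version control.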

The default topic is number 69, which is an open topic and doesn’t require whitelisting. It corresponds to the BTC 24 hour topic. When you use this workflow for other topics, you can pass the `topic_id` argument to the worker.

Why It Matters

No model runs in isolation: each one strengthens the network. Allora’s Inference Synthesis mechanism coordinates contributions from thousands of models, aggregating them into a superior, objective-centric intelligence.

The Forge Builder Kit unifies several core parts of a machine learning workflow so that people can start modeling quickly, without worrying so much about data and submission infrastructure. With this notebook, you can concentrate on feature engineering and predictive modeling, the parts that matter most to the network.

With the Forge Builder Kit, the first-session success rate has already increased significantly. This lowers overhead for both new contributors and the core team. More importantly, it accelerates the pace at which fresh intelligence reaches Allora’s predictive feeds.

Start Building Today

The Forge Builder Kit is the fastest way to contribute intelligence to the Allora Network. Whether you are a quant, a DeFi strategist, or an ML engineer experimenting with your first Allora worker, you can now go from model to live inference in a matter of minutes.

Get the Forge Builder Kit on GitHub to start contributing to the Allora Network.

About the Allora Network

Allora is a self‑improving, decentralized Model Coordination Network (MCN). Instead of providing monolithic models, Allora dynamically coordinates and aggregates thousands of models to solve objective‑centric tasks. This approach enables the network to produce better intelligence than any single model yields on its own, creating a smarter, more secure intelligence that anyone can integrate.

To learn more about Allora Network, visit the Allora website, X, Blog, Discord, Research Hub, and developer docs.